Unfolding trees breadth-first in Haskell

To visit a tree or graph in breadth-first order, there are two main implementation approaches: queue-based or level-based. Our goal here is to develop a level-based approach where the levels of the breadth-first walk are constructed compositionally and dynamically.

Compositionality means that for every node, its descendants—the other nodes reachable from it—are defined by composing the descendants of its children. Dynamism means that the children of a node are generated only when that node is visited; we will see that this requirement corresponds to asking for a monadic unfold.

A prior solution, using the Phases applicative functor,

is compositional but not dynamic in that sense. The essence of Phases

is a zipping operation in free applicative functors.

What if we did zipping in free monads instead?

This is a Literate Haskell post. The source code is on Gitlab. A reusable version of this code is now available on Hackage: the weave library.

Table of contents

- Background: breadth-first folds and traversals

- Problem statement: Breadth-first unfolds

- Queue-based unfold

- Some uses of unfolds

- “Global” level-based unfold

- In search of compositionality

- The weave applicative

- A wrinkle in time

- Out of sight, out of mind

- Laziness for the win

- Laziness without end

- Microbenchmarks: Queues vs Global Levels vs Weaves

- Conclusion

- Recap table

Extensions and imports for this Literate Haskell file

{-# LANGUAGE DeriveAnyClass #-}

{-# LANGUAGE DeriveTraversable #-}

{-# LANGUAGE DeriveGeneric #-}

{-# LANGUAGE GADTs #-}

{-# LANGUAGE LambdaCase #-}

{-# LANGUAGE PackageImports #-}

{-# LANGUAGE PatternSynonyms #-}

{-# LANGUAGE RankNTypes #-}

{-# LANGUAGE ScopedTypeVariables #-}

{-# LANGUAGE ViewPatterns #-}

{-# OPTIONS_GHC -Wno-x-partial -Wno-unused-matches -Wno-unused-top-binds -Wno-unused-imports #-}

import "deepseq" Control.DeepSeq (NFData)

import Data.Foldable (toList)

import Data.Function ((&))

import Data.Functor ((<&>))

import Data.Functor.Identity (Identity(..), runIdentity)

import GHC.Generics (Generic)

import "tasty" Test.Tasty (TestTree, localOption)

import "tasty-hunit" Test.Tasty.HUnit ((@?=), testCase)

import "tasty-bench" Test.Tasty.Bench (bgroup, bench, defaultMain, nf, bcompare)

-- import "tasty-bench" Test.Tasty.Bench (mutatorCpuTime)

import "tasty-expected-failure" Test.Tasty.ExpectedFailure (expectFail)

import "some" Data.Some.Newtype (Some(Some))

import "transformers" Control.Monad.Trans.State

import qualified "containers" Data.Set as Set

import "containers" Data.Set (Set)Background: breadth-first folds and traversals

Our running example will be the type of binary trees:

data Tree a = Leaf | Node a (Tree a) (Tree a)

deriving (Eq, Show, Generic, NFData)A breadth-first walk explores the tree level by level; every level contains the

nodes at the same distance from the root. The list of levels of a tree can be defined

recursively—it is a fold. For a tree Node x l r, the first level contains

just the root node x, and the subsequent levels are obtained by appending the

levels of the subtrees l and r pairwise.

levels :: Tree a -> [[a]]

levels Leaf = []

levels (Node x l r) = [x] : zipLevels (levels l) (levels r)zipLevels :: [[a]] -> [[a]] -> [[a]]

zipLevels [] yss = yss

zipLevels xss [] = xss

zipLevels (xs : xss) (ys : yss) = (xs ++ ys) : zipLevels xss yss(We can’t just use zipWith because it throws away the end of a list when the

other list is empty.)

Finally, we concatenate the levels together to obtain the list of nodes in breadth-first order.

toListBF :: Tree a -> [a]

toListBF = concat . levelsThanks to laziness, the list will indeed be produced by walking the tree in breadth-first order. So far so good.

The above function lets us fold a tree in breadth-first order. The next level of difficulty is to traverse a tree, producing a tree with the same shape as the original tree, only with modified labels.

traverseBF :: Applicative m => (a -> m b) -> Tree a -> m (Tree b)This has the exact same type as traverse, which you might obtain with

deriving (Foldable, Traversable). The stock-derived Traversable—enabled

by the DeriveTraversable extension—is a depth-first traversal, but the laws

of traverse don’t specify the order in which nodes should be visited,

so you could make it a breadth-first traversal if you wanted.

To define a breadth-first traversal is a surprisingly non-trivial exercise, as pointed out by Chris Okasaki in Breadth-first numbering: lessons from a small exercise in algorithm design (ICFP 2000).

“Breadth-first numbering” is a special case of “breadth-first traversal”

where the arrow (a -> m b) is specialized to a counter.

Okasaki presents a “numbering” solution based on queues and another solution

based on levels.

Both are easily adaptable to the more general “traversal” problem as we will

soon see.

There is a wonderful Discourse thread from 2024 on the topic of

breadth-first traversals.

The first post gives an elegant breadth-first numbering algorithm

which also appears in the appendix of Okasaki’s paper,

but sadly it does not generalize from “numbering” to

“traversal” beyond the special case m = State s.

Last but not least, another level-based solution to the breadth-first traversal

problem can be found in the

tree-traversals library by Noah Easterly.

It is built around an applicative transformer named Phases,

which is a list of actions—imagine the type “[m _]”—where each

element m _ represents one level of the tree.

The Phases applicative enables a compositional definition of a

breadth-first traversal, similarly to the levels function above:

the set of nodes reachable from the root is defined by combining the sets of

nodes reachable from its children. This concern of compositionality

is one of the main motivations behind this post.

Non-standard terminology

The broad family of algorithms being discussed is typically called

“breadth-first search” (BFS) or “breadth-first traversal”,

but in general these algorithms are not “searching” for anything,

and in Haskell, “traversal” is reserved for “things like traverse”.

Instead, this post will use “walks” as a term encompassing folds, traversals,

unfolds, or any concept that can be qualified with “breadth-first”.

Problem statement: Breadth-first unfolds

Both the fold toListBF and the traversal traverseBF had in common that they

receive a tree as an input. This explicit tree makes the notion of levels

“static”. With unfolds, we will have to deal with levels that exist only

“dynamically” as the result of unfolding the tree progressively.

To introduce the unfolding of a tree, it is convenient to introduce its “base functor”. We modify the tree type by replacing the recursive tree fields with an extra type parameter:

data TreeF a t = LeafF | NodeF a t t

deriving (Functor, Foldable, Traversable)An unfold generates a tree from a seed and a function which expands the seed into a leaf or a node containing more seeds. A pure unfold—or anamorphism—can be defined readily:

unfold :: (s -> TreeF a s) -> s -> Tree a

unfold f s = case f s of

LeafF -> Leaf

NodeF a l r -> Node a (unfold f l) (unfold f r)The order in which nodes are evaluated depends on

how the resulting tree is consumed. Hence unfold

is neither inherently “depth-first” nor “breadth-first”.

The situation changes if we make the unfold monadic.

unfoldM :: Monad m => (s -> m (TreeF a s)) -> s -> m (Tree a)An implementation of unfoldM must decide upon an ordering between actions.

To see why adding an M to unfold imposes an ordering,

contemplate the fact that these expressions have the same meaning:

Node a (unfold f l) (unfold f r)

= ( let tl = unfold f l in

let tr = unfold f r in

Node a tl tr )

= ( let tr = unfold f r in

let tl = unfold f l in

Node a tl tr )whereas these monadic expressions do not have the same meaning in general:

( unfoldM f l >>= \tl ->

unfoldM f r >>= \tr ->

pure (Node a tl tr) )

/=

( unfoldM f r >>= \tr ->

unfoldM f l >>= \tl ->

pure (Node a tl tr) )Without further requirements, there is an “obvious” definition of unfoldM,

which is a depth-first unfold:

unfoldM_DF :: Monad m => (s -> m (TreeF a s)) -> s -> m (Tree a)

unfoldM_DF f s = f s >>= \case

LeafF -> pure Leaf

NodeF a l r -> liftA2 (Node a) (unfoldM_DF f l) (unfoldM_DF f r)We unfold the left subtree l fully before unfolding the right one r.

The problem is to define a breadth-first unfoldM.

If you want to think about this problem on your own, you can stop reading here. The rest of this post presents solutions.

Queue-based unfold

The two breadth-first numbering algorithms in Okasaki’s paper can

actually be generalized to breadth-first unfolds.

Here is the first one that uses queues (using the function (<+) for “push” and

pattern-matching on (:>) for “pop”):

unfoldM_BF_Q :: Monad m => (s -> m (TreeF a s)) -> s -> m (Tree a)

unfoldM_BF_Q f b0 = go (b0 <+ Empty) <&> \case

_ :> t -> t

_ -> error "impossible"

where

go Empty = pure Empty

go (q :> b) = f b >>= \case

LeafF -> go q <&> \p -> Leaf <+ p

NodeF a b1 b2 -> go (b2 <+ b1 <+ q) <&> \case

p :> t1 :> t2 -> Node a t1 t2 <+ p

_ -> error "impossible"(The operator (<&>) is flip (<$>). I use it to avoid parentheses around

lambdas.)

Queue implementation for unfoldM_BF_Q

data Q a = Q [a] [a]

pattern Empty :: Q a

pattern Empty = Q [] []

infixr 1 <+

(<+) :: a -> Q a -> Q a

x <+ Q xs ys = Q (x : xs) ys

pop :: Q a -> Maybe (Q a, a)

pop (Q xs (y : ys)) = Just (Q xs ys, y)

pop (Q xs []) = case reverse xs of

[] -> Nothing

y : ys -> Just (Q [] ys, y)

infixl 1 :>

pattern (:>) :: Q a -> a -> Q a

pattern q :> y <- (pop -> Just (q, y))

{-# COMPLETE Empty, (:>) #-}As it happens, containers uses that queue-based technique to implement

breadth-first unfold for rose trees (Data.Tree.unfoldTreeM_BF).

There is a pending question of whether we can improve upon it.

This post might provide a theoretical alternative,

but it seems too slow to be worth serious consideration

(see the benchmark section).

If you’re frowning upon the use of error—as you should be—you can replace

error with dummy values here (Empty, Leaf), but

(1) that won’t be possible with tree structures that must be non-empty

(e.g., if Leaf contained a value) and (2) this is dead code, which

is harmless but no more elegant than making it obvious with error.

The correctness of this solution is also not quite obvious.

There are subtle ways to get this implementation wrong:

should the recursive call be b2 <+ b1 <+ q or b1 <+ b2 <+ q?

Should the pattern be p :> t1 :> t2 or p :> t2 :> t1?

For another version of this challenge, try implementing the unfold for another

tree type, such as finger trees or rose trees, without getting lost in the

order of pushes and pops (by the way, this is Data.Tree.unfoldTreeM_BF in

containers). The invariant is not complex but there is room for mistakes.

I believe that the compositional approach that will be presented later is more

robust on that front, although it is admittedly a subjective quality for which

is difficult to make a strong case.

Some uses of unfolds

Traversals from unfolds

One sense in which unfoldM is a more difficult problem than traverse is

that we can use unfoldM to implement traverse.

We do have to make light of the technicality that there is a Monad constraint

instead of Applicative, which makes unfoldM not suited to implement the

Traversable class.

A depth-first unfold gives a depth-first traversal:

traverse_DF :: Monad m => (a -> m b) -> Tree a -> m (Tree b)

traverse_DF = unfoldM_DF . traverseRoot

-- auxiliary function

traverseRoot :: Applicative m => (a -> m b) -> Tree a -> m (TreeF b (Tree a))

traverseRoot _ Leaf = pure LeafF

traverseRoot f (Node a l r) = f a <&> \b -> NodeF b l rA breadth-first unfold gives a breadth-first traversal:

traverse_BF_Q :: Monad m => (a -> m b) -> Tree a -> m (Tree b)

traverse_BF_Q = unfoldM_BF_Q . traverseRootUnfolds in graphs

We can use a tree unfold to explore a graph. This usage distinguishes unfolds from folds and traversals, which only let you explore trees.

Given a type of vertices V, a directed graph is represented by a function

V -> F V, where F is a functor which describes the arity of each node.

The obvious choice for F is lists, but we will stick to TreeF here

so we can just reuse this post’s unfoldM implementations.

The TreeF functor restricts us graphs where each node has zero or two

outgoing edges; it is a weird restriction, but we will make do for the sake of

example.

+-------+

v |

+->1--->2--->3 |

| | | ^ |

| v v | |

| 4--->5--->6--+

| | | ^

| +----|----+

| |

+-------+The graph drawn above turns into the following function, where every vertex

is mapped either to NodeF with the same vertex as the first argument followed

by its two adjacent vertices, or to LeafF if it has no outgoing edges or does

not belong to the graph.

graph :: Int -> TreeF Int Int

graph 1 = NodeF 1 2 4

graph 2 = NodeF 2 3 5

graph 3 = LeafF

graph 4 = NodeF 4 5 6

graph 5 = NodeF 5 1 6

graph 6 = NodeF 6 2 3

graph _ = LeafFIf we simply feed that function to unfold, we will get the infinite tree

of all possible paths from a chosen starting vertex.

To obtain a finite tree, we want to keep track of vertices that we have

already visited, using a stateful memory. The following function wraps graph,

returning LeafF also if a vertex has already been visited.

visitGraph :: Int -> State (Set Int) (TreeF Int Int)

visitGraph vertex = do

visited <- get

if vertex `elem` visited then pure LeafF

else do

put (Set.insert vertex visited)

pure (graph vertex)Applying unfoldM_BF to that function produces a “breadth-first tree”

of the graph, an encoding of the trajectory of a breadth-first walk through the

graph. “Breadth-first trees” are a concept from graph theory with well-studied

properties.

-- Visit `graph` in breadth-first order

bfGraph_Q :: Int -> Tree Int

bfGraph_Q = (`evalState` Set.empty) . unfoldM_BF_Q visitGraphtestGraphQ :: TestTree

testGraphQ = testCase "Q-graph" $

bfGraph_Q 1 @?=

Node 1

(Node 2 Leaf

(Node 5 Leaf Leaf))

(Node 4 Leaf (Node 6 Leaf Leaf))Compile and run

This post is a compilable Literate Haskell file. You can run all of the tests

and benchmarks in here. The source repository provides the necessary

configuration to build it with cabal.

$ cabal build breadth-first-unfoldsTest cases can then be selected with the -p option and a pattern

(see the tasty documentation for details).

Run all tests and benchmarks by passing no option.

$ cabal exec breadth-first-unfolds -- -p "/Q-graph/||/S-graph/"

All

Q-graph: OK

S-graph: OK“Global” level-based unfold

The other solution from Okasaki’s paper can also be adapted into a monadic unfold.

The starting point is to unfold a list of seeds [s] instead of a single seed:

we can traverse the list with the expansion function s -> m (TreeF a s) to

obtain another list of seeds, the next level of the breadth-first unfold,

and keep going.

Iterating this process naively yields a variant of monadic unfold without a

result. This no-result variant can be generalized from TreeF to

any foldable structure:

-- Inner loop: multi-seed unfold

unfoldsM_BF_G_ :: (Monad m, Foldable f) => (s -> m (f s)) -> [s] -> m ()

unfoldsM_BF_G_ f [] = pure ()

-- Read from right to left: traverse, flatten, recurse.

unfoldsM_BF_G_ f xs = unfoldsM_BF_G_ f . concatMap toList =<< traverse f xs

-- Top-level function: single-seed unfold

unfoldM_BF_G_ :: (Monad m, Foldable f) => (s -> m (f s)) -> s -> m ()

unfoldM_BF_G_ f = unfoldsM_BF_G_ f . (: [])Modifying this solution to create the output tree requires a little more thought.

We must keep hold of the intermediate list of ts :: [TreeF a s] to

reconstruct trees after the recursive call returns.

unfoldsM_BF_G :: Monad m => (s -> m (TreeF a s)) -> [s] -> m [Tree a]

unfoldsM_BF_G f [] = pure []

-- traverse, flatten, recurse, reconstruct

unfoldsM_BF_G f xs = traverse f xs >>= \ts ->

reconstruct ts <$> unfoldsM_BF_G f (concatMap toList ts)The reconstruction function picks a root in the first list and completes it with subtrees from the second list:

reconstruct :: [TreeF a s] -> [Tree a] -> [Tree a]

reconstruct (LeafF : ts) us = Leaf : reconstruct ts us

reconstruct (NodeF a _ _ : ts) (l : r : us) = Node a l r : reconstruct ts us

reconstruct _ _ = error "impossible"You could modify the final branch to produce [], but error makes it

explicit that this branch should never be reached by the unfold where it is

used.

The top-level unfold function wraps the seed in a singleton input list and extracts the root from a singleton output list.

unfoldM_BF_G :: Monad m => (s -> m (TreeF a s)) -> s -> m (Tree a)

unfoldM_BF_G f = fmap head . unfoldsM_BF_G f . (: [])

Unit test testGraphG

bfGraph_G :: Int -> Tree Int

bfGraph_G = (`evalState` Set.empty) . unfoldM_BF_G visitGraphtestGraphG :: TestTree

testGraphG = testCase "Q-graph" $

bfGraph_G 1 @?=

Node 1

(Node 2 Leaf

(Node 5 Leaf Leaf))

(Node 4 Leaf (Node 6 Leaf Leaf))This solution is less brittle than the queue-based solution because

we always traverse lists left-to-right.

To avoid the uses of error in reconstruct,

you can probably create a specialized data structure in place of [TreeF a s],

but that is finicky in its own way.

In search of compositionality

Both of the solutions above (the queue-based and the “monolithic” level-based unfolds)

stem from a global view of breadth-first walks: we are iterating on a list or a

queue which holds all the seeds from one or two levels at a time.

That structure represents a “front line” between visited and unvisited

vertices, and every iteration advances the front line a little: with a queue we

advance it one vertex at a time, with a list we advance the whole front line

in an inner loop—one call to traverse—before recursing.

The opposite local view of breadth-first order is exemplified by the earlier

levels function: it only produces a list of lists of the vertices

reachable from the current root. It does so recursively, by composing

together the vertices reachable from its children. Our goal here is to find a

similarly local, compositional implementation of breadth-first unfolds.

Rather than defining unfoldM directly, which sequences the computations on

all levels into a single computation, we will introduce an intermediate

function weave that keeps levels separate—just as toListBF is defined

using levels.

The result of weave will be in an as yet unknown applicative functor F m

depending on m.

And because levels are kept separate, weave only needs

a constraint Applicative m to compose computations on the same level.

The goal is to implement this signature, where the result type F is also an

unknown:

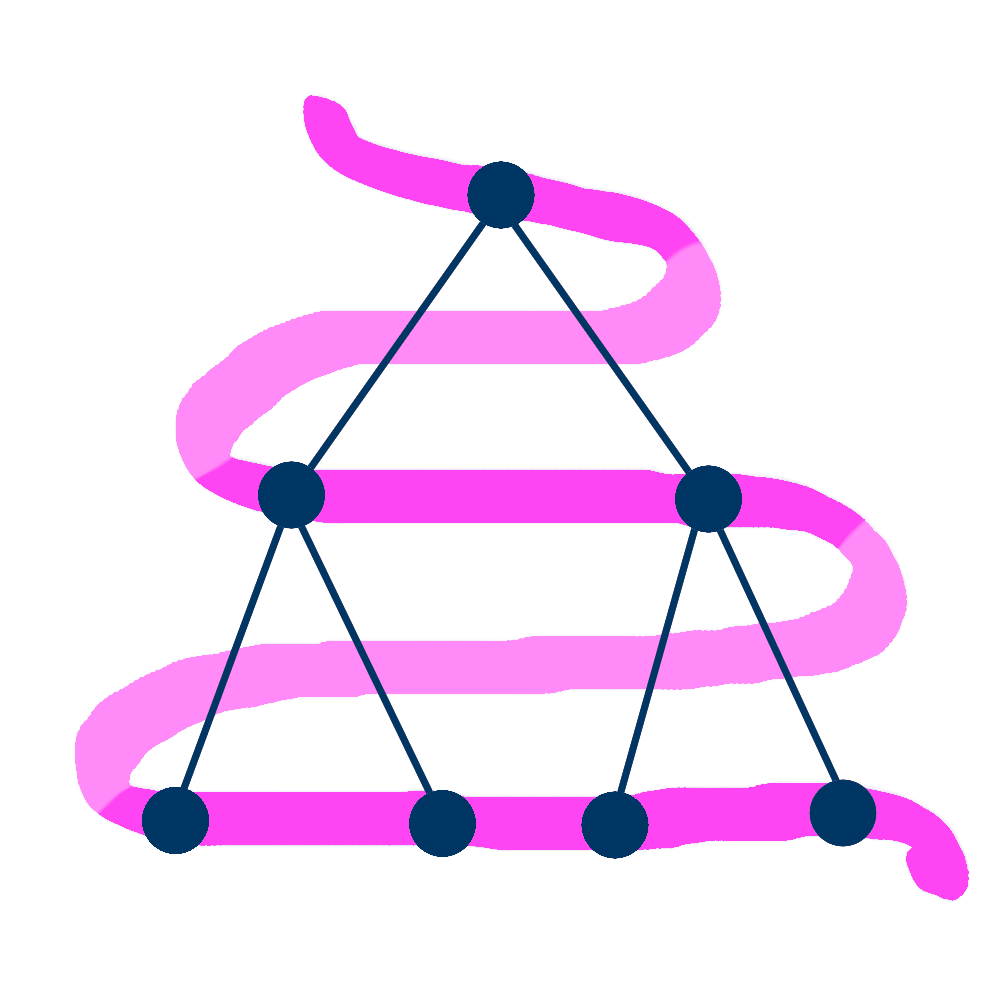

weave :: Applicative m => (s -> m (TreeF a s)) -> s -> F m (Tree a)The name weave comes from visualizing a breadth-first walk

as a path zigzagging across a tree like this:

which is reminiscent of weaving as in the making of textile:

With only what we know so far, a bit of type-directed programming leads to the

following incomplete definition. We have constructed something of type

m (F m (Tree a)), while we expect F m (Tree a):

weave :: Applicative m => (s -> m (TreeF a s)) -> s -> F m (Tree a)

weave f s = _ (step <$> f s) where

step :: TreeF a s -> F m (Tree a)

step LeafF = pure Leaf

step (NodeF a l r) = liftA2 NodeF (weave f l) (weave f r)To fill the hole _, we postulate the following primitive, weft,

as part of the unknown definition of F:

weft :: Applicative m => m (F m a) -> F m aIntuitively, F m represents “multi-level computations”.

The weft function constructs a multi-level (F m)-computation from

one level of m-computation which returns the subsequent levels

as an (F m)-computation.

We fill the hole with weft, completing the definition of weave:

weave :: forall m s a. Applicative m => (s -> m (TreeF a s)) -> s -> F m (Tree a)

weave f s = weft (weaveF <$> f s) where

weaveF :: TreeF a s -> F m (Tree a)

weaveF LeafF = pure Leaf

weaveF (NodeF a l r) = liftA2 (Node a) (weave f l) (weave f r)The function weave defines a multi-level computation which represents

a breadth-first walk from a seed s:

- the first level of the walk is

f s, expanding the initial seed; - the auxiliary function

weaveFconstructs the remaining levels from the initial seed’s expansion:- if the seed expands to

LeafF, there are no more seeds, and we terminate with an empty computation (pure); - if the seed expands to

NodeF, we obtain two sub-seedslandr, they generate their own weaves recursively (weave f landweave f r), and we compose them (liftA2).

- if the seed expands to

One way to think about weft is as a generalization of the following primitives:

we can “embed” m-computations into F m,

and we can “delay” multi-level (F m)-computations, shifting the

m-computation on each level to the next level.

embed :: Applicative m => m a -> F m a

embed u = weft (pure <$> u)

delay :: Applicative m => F m a -> F m a

delay u = weft (pure u)The key law relating these two operations is that embedded computations and delayed computations commute with each other:

embed u *> delay v = delay v <* embed uThe embed and delay operations are provided by the Phases applicative

functor that I mentioned earlier, which enables breadth-first traversals,

but not breadth-first unfolds. Thus, weft is a strictly more expressive

primitive than embed and delay.

Eventually, we will run a multi-level computation as a single m-computation

so that we can use weave to define unfoldM. The runner function will be

called mesh:

mesh :: Monad m => F m a -> m aIt is characterized by this law which says that mesh executes the first

level of the computation u :: m (F m a), then executes the remaining levels

recursively:

mesh (weft u) = u >>= meshPutting everything together, weave and mesh combine into a breadth-first unfold:

unfoldM_BF :: Monad m => (s -> m (TreeF a s)) -> s -> m (Tree a)

unfoldM_BF f s = mesh (weave f s)It remains to find an applicative functor F equipped with weft and mesh.

The weave applicative

A basic approach to design a type is to make some of the operations it

should support into constructors. The weave applicative WeaveS has

constructors for pure and weft:

data WeaveS m a

= EndS a

| WeftS (m (WeaveS m a))(The suffix “S” stands for Spoilers. Read on!)

We instantiate the unknown functor F with WeaveS.

type F = WeaveSAstute readers will have recognized WeaveS as the free monad.

Just as Phases has the same type definition as the free applicative functor but

a different Applicative instance, we will give WeaveS an Applicative

instance that does not coincide with the Applicative and Monad instances of

the free monad.

Starting with the easy functions,

weft is WeftS, and the equation for mesh above is basically its definition.

We just need to add an equation for EndS.

weft :: m (WeaveS m a) -> WeaveS m a

weft = WeftS

mesh :: Monad m => WeaveS m a -> m a

mesh (EndS a) = pure a

mesh (WeftS u) = u >>= meshRecall that WeaveS represents multi-level computations.

Computations are composed level-wise with the following liftS2.

The interesting case is the one where both arguments are WeftS: we compose

the first level with liftA2, and the subsequent ones with liftS2

recursively.

liftS2 :: Applicative m => (a -> b -> c) -> WeaveS m a -> WeaveS m b -> WeaveS m c

liftS2 f (EndS a) wb = f a <$> wb

liftS2 f wa (EndS b) = flip f b <$> wa

liftS2 f (WeftS wa) (WeftS wb) = WeftS ((liftA2 . liftS2) f wa wb)liftS2 will be the liftA2 in WeaveS’s Applicative instance.

The Functor and Applicative instances show that WeaveS is an

applicative transformer: for every applicative functor m,

WeaveS m is also an applicative functor.

instance Functor m => Functor (WeaveS m) where

fmap f (EndS a) = EndS (f a)

fmap f (WeftS wa) = WeftS ((fmap . fmap) f wa)

instance Applicative m => Applicative (WeaveS m) where

pure = EndS

liftA2 = liftS2That completes the definition of unfoldM_BF: a level-based, compositional

breadth-first unfold.

As a unit test, we copy the code for visiting a graph from earlier:

bfGraphS :: Int -> Tree Int

bfGraphS = (`evalState` Set.empty) . unfoldM_BF visitGraphtestGraphS :: TestTree

testGraphS = testCase "S-graph" $

bfGraphS 1 @?=

Node 1

(Node 2 Leaf

(Node 5 Leaf Leaf))

(Node 4 Leaf (Node 6 Leaf Leaf))Code golf

There is a variant of weave that I prefer:

weaveS :: Applicative m => (s -> m (TreeF a s)) -> s -> m (WeaveS m (Tree a))

weaveS f s = f s <&> \case

LeafF -> pure Leaf

NodeF a l r -> liftA2 (Node a) (weft (weaveS f l)) (weft (weaveS f r))The outer weft constructor was moved into the recursive calls.

The result type has an extra m, which makes it more apparent that

we always start with a call to f. It’s the same vibe as replacing the type

[a] with NonEmpty a when we know that a list will always have at least one

element; weaveS always produces at least one level of computation.

We also replace (<$>) with its flipped version (<&>) for aesthetic reasons:

we can apply it to a lambda without parentheses, and that change makes the

logic flow naturally from left to right: we first expand the seed s using

f, and continue depending on whether the expansion produced LeafF or NodeF.

To define unfoldM, instead of applying mesh directly, we chain it with

(>>=).

unfoldM_BF_S :: Monad m => (s -> m (TreeF a s)) -> s -> m (Tree a)

unfoldM_BF_S f s = weaveS f s >>= meshA wrinkle in time

That solution is Obviously Correct™, but it has a terrible flaw: it does not run in linear time!

We can demonstrate this by generating a “thin” tree whose height

is equal to its size.

The height h is the seed of the unfolding, and we generate a NodeF as long

as it is non-zero, asking for a decreased height h - 1 on the right,

and a zero height on the left.

thinTreeS :: Int -> Tree ()

thinTreeS = runIdentity . unfoldM_BF_S f

where

f 0 = pure LeafF

f h = pure (NodeF () 0 (h - 1))Compare the running times of evaluating thinTreeS at height 100

(the baseline)

and at height 1000 (10x the baseline).

benchS :: TestTree

benchS = bgroup "S-thin"

[ bench "1x" (nf thinTreeS 100)

, bench "10x" (nf thinTreeS 1000) & bcompare "S-thin.1x"

]Benchmark output (relative):

| height | time |

|---|---|

| baseline | 1x |

| 10x | 105x |

Raw output

$ cabal exec breadth-first-unfolds -- -p "S-thin"

All

S-thin

1x: OK

27.6 μs ± 2.6 μs, 267 KB allocated, 317 B copied, 6.0 MB peak memory

10x: OK

2.90 ms ± 181 μs, 23 MB allocated, 178 KB copied, 7.0 MB peak memory, 105.35xMultiplying the height by 10x makes the function run 100x slower. Dramatically quadratic.

Complexity analysis

We can compare this implementation with level from earlier, which is linear-time.

In particular, looking at zipLevels with liftS2—which play similar

roles—there is a crucial difference when one of the arguments is empty

([] or EndS):

zipLevels simply returns the other argument, whereas liftS2 calls (<$>),

continuing the recursion down the other argument.

So zipLevels stops working after reaching the end of either argument, whereas

liftS2 walks to the end of both arguments. There is at least one

call to liftS2 on every level which will walk to the bottom of the tree,

so we get a quadratic lower bound Ω(height2).

Out of sight, out of mind

The problematic combinators are fmap and liftS2, which weaveS uses to

construct the unfolded tree. If we don’t care about that tree—wanting only

the effect of a monadic unfold—then we can get rid of the complexity

associated with those combinators.

With no result to return, we remove the a type parameter from the definition

of WeaveS, yielding the oblivious (“O”) variant:

data WeaveO m

= EndO

| WeftO (m (WeaveO m))We rewrite mesh into meshO, reducing a WeaveO m computation

into m () instead of m a.

meshO :: Monad m => WeaveO m -> m ()

meshO EndO = pure ()

meshO (WeftO u) = u >>= meshOThe Applicative instance for WeaveS becomes a Monoid instance for WeaveO.

liftA2 is replaced with (<>), zipping two computations level-wise.

instance Applicative m => Semigroup (WeaveO m) where

EndO <> v = v

u <> EndO = u

WeftO u <> WeftO v = WeftO (liftA2 (<>) u v)

instance Applicative m => Monoid (WeaveO m) where

mempty = EndO

mappend = (<>)To implement a breadth-first walk, we modify weaveS above by replacing

liftA2 (Node a) with (<>). Note that the type parameter a is no longer in

the result. It was only used in the tree that we decided to forget.

weaveO :: Applicative m => (s -> m (TreeF a s)) -> s -> m (WeaveO m)

weaveO f s = f s <&> \case

LeafF -> mempty

NodeF _ l r -> WeftO (weaveO f l) <> WeftO (weaveO f r)Running weaveO with meshO yields a oblivious monadic unfold:

it produces m () instead of m (Tree a).

(You may remember seeing another implementation of that same signature

just earlier, unfoldM_BF_G_.)

unfoldM_BF_O_ :: Monad m => (s -> m (TreeF a s)) -> s -> m ()

unfoldM_BF_O_ f s = weaveO f s >>= meshOPreviously, we benchmarked the function thinTreeS that outputs a tree by

forcing the tree. With an oblivious unfold, there is no tree to force.

Instead we will count the number of generated NodeF constructors:

thinTreeO :: Int -> Int

thinTreeO = (`execState` 0) . unfoldM_BF_O_ (state . f)

where

f 0 counter = (LeafF, counter)

f h counter = (NodeF () 0 (h - 1), counter + 1) -- increment the counter for every NodeFWe adapt the benchmark from before to measure the complexity of unfolding thin trees. We have to increase the baseline height from 100 to 500 because this benchmark runs so much faster than the previous ones.

benchO :: TestTree

benchO = bgroup "O-thin"

[ bench "1x" (nf thinTreeO 500)

, bench "10x" (nf thinTreeO 5000) & bcompare "O-thin.1x"

]Benchmark output (relative):

| height | time |

|---|---|

| baseline | 1x |

| 10x | 9.8x |

Raw output

$ cabal exec breadth-first-unfolds -- -p O-thin

All

O-thin

1x: OK

148 μs ± 8.3 μs, 543 KB allocated, 773 B copied, 6.0 MB peak memory

10x: OK

1.45 ms ± 113 μs, 5.4 MB allocated, 82 KB copied, 7.0 MB peak memory, 9.78xThe growth is linear, as desired: the “10x” bench is 10x slower than the baseline “1x” bench.

Laziness for the win

The oblivious unfold avoided quadratic explosion by simplifying the problem.

Now let’s solve the original problem again,

so we can’t just get rid of fmap and liftA2.

As mentioned previously, the root cause was that (1) liftA2 calls fmap when

one of the constructors is EndS, and (2) fmap traverses the other argument.

The next solution will be to make fmap take constant time,

by storing the “mapped function” in the constructor.

Behold the “L” variant of WeaveS, which is a GADT:

data WeaveL m a where

EndL :: a -> WeaveL m a

WeftL :: m (WeaveL m b) -> (b -> a) -> WeaveL m aFor comparison, here is the previous “S” variant with GADT syntax:

data WeaveS m a where

EndS :: a -> WeaveS m a

WeftS :: m (WeaveS m a) -> WeaveS m aThis trick is also known as the “co-Yoneda construction”.

The definition of fmap is no longer recursive.

It doesn’t even need m to be a functor anymore!

instance Functor (WeaveL m) where

fmap f (EndL a) = EndL (f a)

fmap f (WeftL wa g) = WeftL wa (f . g)The Applicative instance is… a good exercise for the reader.

The details are not immediately important—we only care about improving fmap

for now—we will come back to have a look at the Applicative instance soon.

The runner function meshL is a simple bit of type Tetris.

meshL :: Monad m => WeaveL m a -> m a

meshL (EndL a) = pure a

meshL (WeftL wa f) = f <$> (wa >>= meshL)By partially applying WeftL to id as its second argument,

we obtain a counterpart to the unary WeftS constructor:

weftL :: m (WeaveL m a) -> WeaveL m a

weftL wa = WeftL wa idWith those primitives redefined, the “weave” and “unfold” are identical. Below, we only renamed the “S” suffixes to “L”:

weaveL :: Applicative m => (s -> m (TreeF a s)) -> s -> m (WeaveL m (Tree a))

weaveL f s = f s <&> \case

LeafF -> pure Leaf

NodeF a s1 s2 -> liftA2 (Node a) (weftL (weaveL f s1)) (weftL (weaveL f s2))

unfoldM_BF_L :: Monad m => (s -> m (TreeF a s)) -> s -> m (Tree a)

unfoldM_BF_L f s = weaveL f s >>= meshLThe benchmarks show that 10x the height takes 10x the time. Linear growth again.

Benchmark code and output: thinTreeL and benchL

Copy of the benchS benchmark.

thinTreeL :: Int -> Tree ()

thinTreeL = runIdentity . unfoldM_BF_L f

where

f 0 = pure LeafF

f h = pure (NodeF () 0 (h - 1))

benchL :: TestTree

benchL = bgroup "L-thin"

[ bench "1x" (nf thinTreeL 100)

, bench "10x" (nf thinTreeL 1000) & bcompare "L-thin.1x"

]Benchmark output (relative):

| height | time |

|---|---|

| baseline | 1x |

| 10x | 9.93x |

Raw output:

$ cabal exec breadth-first-unfolds -- -p "L-thin"

All

L-thin

1x: OK

14.1 μs ± 782 ns, 59 KB allocated, 5 B copied, 6.0 MB peak memory

10x: OK

140 μs ± 13 μs, 586 KB allocated, 51 B copied, 6.0 MB peak memory, 9.93xLazy in more ways than one

As hinted by the “L” and “S” suffixes,

WeaveL is a “lazy” variant of WeaveS: fmap for WeaveL “postpones”

work by accumulating functions in the WeftL constructor.

That work is “forced” by meshL, which is where the fmap ((<$>)) of the

underlying monad m is called, performing the work accumulated

by possibly many calls to WeaveL’s fmap.

One subtlety is that there are multiple “lazinesses” at play.

The main benefit of using WeaveL is really to delay computation,

that is a kind of laziness, but WeaveL doesn’t need to be

implemented in a lazy language.

We can rewrite all of the code we’ve seen so far in a strict language

with minor changes, and we will still observe the quadratic vs linear behavior

of WeaveS vs WeaveL on thin trees.

The “manufactured laziness” of WeaveL is a concept independent of the

“ambient laziness” in Haskell.

Nevertheless, we can still find an interesting role for that “ambient laziness”

in this story. Indeed, the function weaveL also happens to be lazier than

weaveS in the usual sense.

A concrete test case is worth a thousand words. Consider the following

tree generator which keeps unfolding left subtrees while making

every right subtree undefined:

partialTreeF :: Bool -> TreeF () Bool

partialTreeF True = NodeF () True False

partialTreeF False = undefinedIf we used the pure unfold, we would get the same tree as this

recursive definition:

partialTree :: Tree ()

partialTree = Node () partialTree undefinedWhat happens if we use one of the monadic unfolds? For example

unfoldM_BF_S:

partialTreeS :: Tree ()

partialTreeS = runIdentity (unfoldM_BF_S (Identity . partialTreeF) True)Try to force the first Node constructor.

whnfTreeS :: TestTree

whnfTreeS = expectFail $ testCase "S-whnf" $ do

case partialTreeS of

Node _ _ _ -> pure () -- Succeed

Leaf -> error "unreachable" -- definitely not a LeafAs it turns out, this test using the “S” variant fails. (That’s

why the test is marked with expectFail.)

Forcing partialTreeS evaluates the undefined in partialTreeF.

Therefore partialTreeS is not equivalent to partialTree.

$ cabal exec breadth-first-unfolds -- -p "S-whnf"

All

S-whnf: FAIL (expected)

Exception: Prelude.undefined

CallStack ...In contrast, the “L” variant makes that same test succeed.

partialTreeL :: Tree ()

partialTreeL = runIdentity (unfoldM_BF_L (Identity . partialTreeF) True)

whnfTreeL :: TestTree

whnfTreeL = testCase "L-whnf" $ do

case partialTreeL of

Node _ _ _ -> pure () -- Succeed

Leaf -> error "unreachable"Test output:

$ cabal exec breadth-first-unfolds -- -p "L-whnf"

All

L-whnf: OKThis difference can only be seen with “lazy monads”, where (>>=) is

lazy in its first argument.

(If this definition sounds not quite right, that’s probably because of seq.

It makes a precise definition of “lazy monad” more complicated.)

Examples of lazy monads from the transformers library

are Identity, Reader, lazy State, lazy Writer, and Accum.

The secret sauce is the definition of liftA2 for WeaveL:

instance Applicative m => Applicative (WeaveL m) where

pure = EndL

liftA2 f (EndL a) wb = f a <$> wb

liftA2 f wa (EndL b) = flip f b <$> wa

liftA2 f (WeftL wa g) (WeftL wb h)

= WeftL ((liftA2 . liftA2) (,) wa wb) (\ ~(a, b) -> f (g a) (h b))In the third clause of liftA2, we put the function f in a lambda with a

lazy pattern (~(a, b)) directly under the topmost constructor WeftL.

Thus, we can access the result of f from the second field of WeftL

without looking at the first field. In liftS2 earlier, f was

passed as an argument to (liftA2 . liftS2), that forces us to run the

computation before we can get a hold on the result of f.

Maximizing laziness

The “L” variant of unfoldM is lazier than the “S” variant,

but there is still a gap between partialTreeL and the pure partialTree:

if we force not only the root, but also the left subtree of partialTreeL,

then we run into undefined again.

forceLeftTreeL :: TestTree

forceLeftTreeL = expectFail $ testCase "L-left" $ do

case partialTreeL of

Node _ (Node _ _ _) _ -> pure () -- Succeed

_ -> error "unreachable"Test output:

$ cabal exec breadth-first-unfolds -- -p "L-left"

All

L-left: FAIL (expected)

Exception: Prelude.undefinedAlthough the unfold using WeaveL is lazier than using WeaveS,

it is not yet as lazy as it could be.

The reason is that, strictly speaking, WeaveL’s liftA2 is a strict function.

The expansion function partialTreeF produces a level with an undefined

sub-computation, which crashes the whole level.

Each level in a computation will be either completely defined or undefined.

To recap, we’ve been looking at the following trees:

partialTreeS = undefined

partialTreeL = Node () undefined undefined

partialTree = Node () partialTree undefinedIt is natural to ask: can we define a breadth-first unfold that, when applied

to partialTreeF, will yield the same tree as partialTree?

More generally, the new problem is to define a breadth-first unfoldM

whose specialization with the Identity functor is equivalent to

the pure unfold even on partially-defined values. That is, it satisfies

the following equation:

unfold f = runIdentity . unfoldM (Identity . f)Laziness without end

The strictness of liftA2 is caused by WeaveL having two constructors.

Let’s get rid of EndL.

data WeaveE m a where

WeftE :: m (WeaveE m b) -> (b -> a) -> WeaveE m aHaving only one constructor lets us use lazy patterns:

instance Functor (WeaveE m) where

fmap f ~(WeftE wa g) = WeftE wa (f . g)Wait a second. I spoke too fast, GHC gives us an error:

error: [GHC-87005]

• An existential or GADT data constructor cannot be used

inside a lazy (~) pattern

• In the pattern: WeftE wa g

In the pattern: ~(WeftE wa g)

In an equation for ‘fmap’: fmap f ~(WeftE wa g) = WeftE wa (f . g)

|

641 | > fmap f ~(WeftE wa g) = WeftE wa (f . g)

| ^^^^^^^^^^The feature we need is “first-class existentials”, for which there is an open GHC proposal.

Not letting that stop us, there is a simple version of first-class existentials

available in the package some,

as the module Data.Some.Newtype (internally using unsafeCoerce).

That will be sufficient for our purposes.

All we need is an abstract type Some and a pattern synonym:

-- imported from Data.Some.Newtype

data Some f

pattern Some :: f a -> Some fAnd we’re back on track. Here comes the actual “E” (endless) variant:

newtype WeaveE m a = MkWeaveE (Some (WeavingE m a))

data WeavingE m a b where

WeftE :: m (WeaveE m b) -> (b -> a) -> WeavingE m a bI spare you the details.

Functor, Applicative, weftE, meshE

instance Functor (WeaveE m) where

fmap f (MkWeaveE (Some ~(WeftE u g))) = MkWeaveE (Some (WeftE u (f . g)))

instance Applicative m => Applicative (WeaveE m) where

pure x = MkWeaveE (Some (WeftE (pure (pure ())) (\_ -> x)))

liftA2 f (MkWeaveE (Some ~(WeftE u g))) (MkWeaveE (Some ~(WeftE v h)))

= MkWeaveE (Some (WeftE ((liftA2 . liftA2) (,) u v) (\ ~(x, y) -> f (g x) (h y))))

weftE :: m (WeaveE m a) -> WeaveE m a

weftE u = MkWeaveE (Some (WeftE u id))

meshE :: Monad m => WeaveE m a -> m a

meshE (MkWeaveE (Some (WeftE u f))) = f <$> (u >>= meshE)

Breadth-first unfold, “E” variant: weaveE and unfoldM_BF_E

weaveE :: Applicative m => (s -> m (TreeF a s)) -> s -> m (WeaveE m (Tree a))

weaveE f s = f s <&> \case

LeafF -> pure Leaf

NodeF a s1 s2 -> liftA2 (Node a) (weftE (weaveE f s1)) (weftE (weaveE f s2))

unfoldM_BF_E :: Monad m => (s -> m (TreeF a s)) -> s -> m (Tree a)

unfoldM_BF_E f s = weaveE f s >>= meshEThe endless WeaveE enables an even lazier implementation of unfoldM.

When specialized to the identity monad, it lets us force the resulting

tree in any order. The forceLeftTreeE test passes (unlike forceLeftTreeL).

partialTreeE :: Tree ()

partialTreeE = runIdentity (unfoldM_BF_E (Identity . partialTreeF) True)

forceLeftTreeE :: TestTree

forceLeftTreeE = testCase "E-left" $ do

case partialTreeE of

Node _ (Node _ _ _) _ -> pure () -- Succeed

_ -> error "unreachable"Test output:

$ cabal exec breadth-first-unfolds -- -p "E-left"

All

E-left: OKOne can also check that forcing the left spine of partialTreeE

arbitrarily deep throws no errors.

We made it lazy, but at what cost?

First, this “Endless” variant only works for lazy monads.

With a strict monad, the runner meshE will loop forever.

It is possible to run things more incrementally by pattern-matching on

WeaveE, but you’re better off using the oblivious WeaveO anyway.

Second, when you aren’t running into an unproductive loop, the “Endless” variant of

unfoldM has quadratic time complexity Ω(height2). The reason

is essentially the same as the “Strict” variant: liftA2 keeps looping even if

one argument is a pure weave—before, that was to traverse the other

non-pure argument, now, there isn’t even a way to tell when the computation

has ended.

Thus, every leaf may create work proportional to the height of the tree.

Running the same benchmark as before, we measure even more baffling timings:

| height | time |

|---|---|

| baseline | 1x |

| 10x | 738x |

Benchmark: thinTreeE and benchE

thinTreeE :: Int -> Tree ()

thinTreeE = runIdentity . unfoldM_BF_E f

where

f 0 = pure LeafF

f h = pure (NodeF () 0 (h - 1))

benchE :: TestTree

benchE = {- localOption mutatorCpuTime $ -} bgroup "E-thin"

[ bench "1x" (nf thinTreeE 100)

, bench "10x" (nf thinTreeE 1000) & bcompare "E-thin.1x"

]Raw output:

$ cabal exec breadth-first-unfolds -- -p "E-thin."

All

E-thin

1x: OK

243 μs ± 22 μs, 1.2 MB allocated, 13 KB copied, 6.0 MB peak memory

10x: OK

179 ms ± 17 ms, 119 MB allocated, 29 MB copied, 21 MB peak memory, 737.76xUsing the previous setup comparing a baseline and a 10x run, we see a more than 700x slowdown, so much worse than the 100x predicted by a quadratic model. Interestingly, the raw output shows that the total cumulative allocations did grow by a 100x factor.1

But it gets weirder with more data points: it does not follow a clear power law. If Time(n) grew as nc for some fixed exponent c, then the ratio Time(Mn)/Time(n) would be Mc, a constant that does not depend on n.

In the following benchmark, we keep doubling the height (M = 2) for every test case, and we measure the time relative to the preceding case each time. A quadratic model predicts a 4x slowdown at every step. Instead, we observe wildly varying factors.

Benchmark output (each time factor is relative to the preceding line, for example, the “4x” benchmark is 9.5x slower than the “2x” benchmark):

| height | time |

|---|---|

| 1x | |

| 2x | 10.9x |

| 4x | 9.5x |

| 8x | 5.4x |

| 16x | 1.4x |

Code and raw output

benchE' :: TestTree

benchE' = {- localOption mutatorCpuTime $ -} bgroup "E-thin-more"

[ bench "1x" (nf thinTreeE 100)

, bench "2x" (nf thinTreeE 200) & bcompare "E-thin-more.1x"

, bench "4x" (nf thinTreeE 400) & bcompare "E-thin-more.2x"

, bench "8x" (nf thinTreeE 800) & bcompare "E-thin-more.4x"

, bench "16x" (nf thinTreeE 1000) & bcompare "E-thin-more.8x"

]$ cabal exec breadth-first-unfolds -- -p "E-thin-more"

All

E-thin-more

1x: OK

222 μs ± 9.3 μs, 1.2 MB allocated, 13 KB copied, 6.0 MB peak memory

2x: OK

2.43 ms ± 85 μs, 4.8 MB allocated, 236 KB copied, 7.0 MB peak memory, 10.94x

4x: OK

23.1 ms ± 1.2 ms, 19 MB allocated, 2.7 MB copied, 10 MB peak memory, 9.53x

8x: OK

126 ms ± 7.8 ms, 76 MB allocated, 18 MB copied, 24 MB peak memory, 5.44x

16x: OK

181 ms ± 7.0 ms, 119 MB allocated, 30 MB copied, 24 MB peak memory, 1.44xI believe this benchmark is triggering some pathological behavior in the garbage

collector. I modified tasty-bench with an option to measure CPU time without GC

(mutator time). At time of writing, tasty-bench is still waiting for a new release.

We can point Cabal to an unreleased commit of tasty-bench by adding the following

lines to cabal.project.local.

source-repository-package

type: git

location: https://github.com/Bodigrim/tasty-bench.git

tag: 81ff742a3db1d514461377729e00a74e5a9ac1b8Then, uncomment the setting “localOption mutatorCpuTime $” in benchE and

benchE' above and uncomment the import of mutatorCpuTime at the top.

Benchmark output (excluding GC time, relative):

| height | time |

|---|---|

| baseline | 1x |

| 1x | 95x |

Raw output

$ cabal exec breadth-first-unfolds -- -p "E-thin."

All

E-thin

1x: OK

216 μs ± 18 μs, 1.2 MB allocated, 13 KB copied, 6.0 MB peak memory

10x: OK

20.5 ms ± 1.9 ms, 119 MB allocated, 29 MB copied, 21 MB peak memory, 94.91xFor the “2x” benchmarks, we are closer the expected 4x slowdown, but there is still a noticeable gap. I’m going to chalk the rest to inherent measurement errors (the cost of tasty-bench’s simplicity) exacerbated by the pathological GC behavior; a possible explanation is that the pattern of memory usage becomes so bad that it affects non-GC time.

Benchmark output (excluding GC time, each measurement is relative to the preceding line):

| height | time |

|---|---|

| 1x | |

| 2x | 3.2x |

| 4x | 4.2x |

| 8x | 4.5x |

| 16x | 1.7x |

Raw output

$ cabal exec breadth-first-unfolds -- -p "E-thin-more"

All

E-thin-more

1x: OK

186 μs ± 16 μs, 1.2 MB allocated, 13 KB copied, 21 MB peak memory

2x: OK

597 μs ± 28 μs, 4.8 MB allocated, 236 KB copied, 21 MB peak memory, 3.20x

4x: OK

2.48 ms ± 148 μs, 19 MB allocated, 2.9 MB copied, 21 MB peak memory, 4.15x

8x: OK

11.2 ms ± 986 μs, 76 MB allocated, 18 MB copied, 24 MB peak memory, 4.50x

16x: OK

18.4 ms ± 1.7 ms, 119 MB allocated, 29 MB copied, 24 MB peak memory, 1.65xIt doesn’t seem possible for a breadth-first unfold to be both maximally lazy and of linear time complexity, but I don’t know how to formally prove that impossibility either.

Microbenchmarks: Queues vs Global Levels vs Weaves

So far we’ve focused on asymptotics (linear vs quadratic). Some readers will inevitably wonder about real speed. Among the linear-time algorithms—queues (“Q”), global levels (“G”), and weaves (lazy “L” or oblivious “O”)—which one is faster?

tl;dr: Queues are (much) faster in these microbenchmarks (up to 25x!), but keep in mind that these are all quite naive implementations.

There are two categories to measure separately: unfolds which produce trees,

and oblivious unfolds—which don’t produce trees. These microbenchmarks

construct full trees up to a chosen number of nodes. When there is an

output tree, we force it (using nf), otherwise we force a counter of the

number of nodes. We run on different sufficiently large sizes (500 and 5000)

to check the stability of the measured factors, ensuring that we are only

comparing the time components that dominate at scale.

The tables list times relative to the queue benchmark for each tree size.

Tree-producing unfolds

| algorithm | size | time |

|---|---|---|

| Queue | 500 | 1x |

| Global Levels | 500 | 1.4x |

| Lazy Weave | 500 | 3.1x |

| Queue | 5000 | 1x |

| Global Levels | 5000 | 1.2x |

| Lazy Weave | 5000 | 3.3x |

Code and raw output

fullTreeF :: Int -> Int -> TreeF Int Int

fullTreeF size n | n >= size = LeafF

fullTreeF size n = NodeF n (2 * n) (2 * n + 1)

fullTree_Q :: Int -> Tree Int

fullTree_Q size = runIdentity (unfoldM_BF_Q (Identity . fullTreeF size) 1)

fullTree_G :: Int -> Tree Int

fullTree_G size = runIdentity (unfoldM_BF_G (Identity . fullTreeF size) 1)

fullTree_L :: Int -> Tree Int

fullTree_L size = runIdentity (unfoldM_BF_L (Identity . fullTreeF size) 1)

fullTree :: TestTree

fullTree = bgroup "fullTree"

[ bench "Q-1x" (nf fullTree_Q 500)

, bench "G-1x" (nf fullTree_G 500) & bcompare "fullTree.Q-1x"

, bench "L-1x" (nf fullTree_L 500) & bcompare "fullTree.Q-1x"

, bench "Q-10x" (nf fullTree_Q 5000)

, bench "G-10x" (nf fullTree_G 5000) & bcompare "fullTree.Q-10x"

, bench "L-10x" (nf fullTree_L 5000) & bcompare "fullTree.Q-10x"

]$ cabal exec breadth-first-unfolds -- -p fullTree

All

fullTree

Q-1x: OK

20.6 μs ± 1.1 μs, 141 KB allocated, 477 B copied, 6.0 MB peak memory

G-1x: OK

28.6 μs ± 2.4 μs, 223 KB allocated, 928 B copied, 6.0 MB peak memory, 1.39x

L-1x: OK

64.3 μs ± 5.6 μs, 353 KB allocated, 3.7 KB copied, 6.0 MB peak memory, 3.13x

Q-10x: OK

287 μs ± 26 μs, 1.5 MB allocated, 57 KB copied, 7.0 MB peak memory

G-10x: OK

349 μs ± 30 μs, 2.2 MB allocated, 94 KB copied, 7.0 MB peak memory, 1.22x

L-10x: OK

935 μs ± 73 μs, 3.5 MB allocated, 386 KB copied, 7.0 MB peak memory, 3.25xOblivious unfolds

| algorithm | size | time |

|---|---|---|

| Queue | 500 | 1x |

| Global Levels | 500 | 11x |

| Oblivious Weave | 500 | 25x |

| Queue | 5000 | 1x |

| Global Levels | 5000 | 10x |

| Oblivious Weave | 5000 | 24x |

Code and raw output

unfoldM_BF_Q_ :: Monad m => (s -> m (TreeF a s)) -> s -> m ()

unfoldM_BF_Q_ f s0 = unfoldM_f (s0 <+ Empty)

where

unfoldM_f (q :> s) = f s >>= \case

LeafF -> unfoldM_f q

NodeF _ l r -> unfoldM_f (r <+ l <+ q)

unfoldM_f Empty = pure ()eatFullTree_Q :: Int -> Int

eatFullTree_Q size = (`execState` 0) (unfoldM_BF_Q_ (state . \n c -> (fullTreeF size n, c + 1)) 1)

eatFullTree_G :: Int -> Int

eatFullTree_G size = (`execState` 0) (unfoldM_BF_G_ (state . \n c -> (fullTreeF size n, c + 1)) 1)

eatFullTree_O :: Int -> Int

eatFullTree_O size = (`execState` 0) (unfoldM_BF_O_ (state . \n c -> (fullTreeF size n, c + 1)) 1)

eatFullTree :: TestTree

eatFullTree = bgroup "eatFullTree"

[ bench "Q-1x" (nf eatFullTree_Q 500)

, bench "G-1x" (nf eatFullTree_G 500) & bcompare "eatFullTree.Q-1x"

, bench "W-1x" (nf eatFullTree_O 500) & bcompare "eatFullTree.Q-1x"

, bench "Q-10x" (nf eatFullTree_Q 5000)

, bench "G-10x" (nf eatFullTree_G 5000) & bcompare "eatFullTree.Q-10x"

, bench "W-10x" (nf eatFullTree_O 5000) & bcompare "eatFullTree.Q-10x"

]$ cabal exec breadth-first-unfolds -- -p eatFullTree

All

eatFullTree

Q-1x: OK

11.0 μs ± 724 ns, 78 KB allocated, 338 B copied, 6.0 MB peak memory

G-1x: OK

116 μs ± 11 μs, 379 KB allocated, 1.3 KB copied, 6.0 MB peak memory, 10.57x

W-1x: OK

278 μs ± 14 μs, 830 KB allocated, 5.9 KB copied, 6.0 MB peak memory, 25.36x

Q-10x: OK

120 μs ± 11 μs, 781 KB allocated, 21 KB copied, 6.0 MB peak memory

G-10x: OK

1.23 ms ± 122 μs, 3.9 MB allocated, 109 KB copied, 7.0 MB peak memory, 10.27x

W-10x: OK

2.92 ms ± 255 μs, 8.4 MB allocated, 631 KB copied, 7.0 MB peak memory, 24.43xConclusion

I hope to have piqued your interest in breadth-first unfolds without

using queues.

To the best of my knowledge, this specific problem hasn’t been studied in the

literature. It is of course related to breadth-first traversals,

previously solved using the Phases applicative.2

The intersection of functional programming and breadth-first walks is a small

niche, which makes it quick to survey that corner of the world for any related

ideas to those presented here.

The paper Modular models of monoids with operations by Zhixuan Yang

and Nicolas Wu, in ICFP 2023, mentions a general construction of Phases as an

example application of their theory. Basically, Phases is defined by a

fixed-point equation:

Phases f = Day f Phases :+: IdentityWe can express Phases abstractly as a least fixed-point

μx.f▫x + Id in any monoidal category with a suitable structure.

If we instantiate the monoidal product ▫ not with Day convolution,

but with functor composition (Compose), then we get Weave.

In another coincidence, the monad-coroutine package

implements a weave function which is a generalization of

liftS2—this may require some squinting.

While WeaveS as a data type coincides with the free monad Free,

monad-coroutine’s core data type Coroutine coincides

with the free monad transformer FreeT.

We can view Phases as a generalization of “zipping” from

lists to free applicatives—which are essentially lists of actions,

and Weave generalizes that further to free monads. To recap, the surprise was

that the naive data type of free monads results in a quadratic-time unfold.

That issue motivated a “lazy” variant3 which achieves a linear-time

breadth-first unfold. That in turn suggested an even “lazier” variant which

enables more control on evaluation order at the cost of efficiency.

I’ve just released the weave library which implements the main ideas of this post. I don’t expect it to have many users, given how much slower it is compared to queue-based solutions. But I would be curious to find a use case for the new compositionality afforded by this abstraction.

Recap table

| Unfolds | Time | Laziness | Compositional | |

|---|---|---|---|---|

| Phases* | No | linear† | by levels | Yes |

| Queue (Q) | Yes | linear† | strict | No |

| Global Levels (G) | Yes | linear† | by levels | No |

| Strict Weave (S) | Yes | quadratic‡ | strict | Yes |

| Oblivious Weave (O) | Oblivious only | linear† | N/A | Yes |

| Lazy Weave (L) | Yes | linear† | by levels | Yes |

| Endless Weave (E) | Yes | quadratic‡E | maximally lazy◊ | Yes |

†Linear wrt. size: Θ(size).

‡Quadratic wrt. height: lower bound Ω(height2), upper bound O(height × size).

EThe “Endless” meshE only terminates with lazy monads.

*I guess there exists an “endless Phases” variant, that

would be quadratic and maximally lazy.

◊The definition of “maximally lazy” in this post actually misses a

range of possible lazy behaviors with monads other than Identity. A further

refinement seems to be another can of worms.

The main action of this Literate Haskell program

main :: IO ()

main = defaultMain

[ testGraphQ

, testGraphG

, testGraphS

, testGraphL

, testGraphE

, whnfTreeQ

, whnfTreeS

, whnfTreeL

, whnfTreeE

, forceLeftTreeL

, forceLeftTreeE

, benchS

, benchO

, benchL

, benchE

, benchE'

, fullTree

, eatFullTree

]Extra test cases

whnfTreeE :: TestTree

whnfTreeE = testCase "E-whnf" $ do

case partialTreeE of

Node _ _ _ -> pure () -- Succeed

Leaf -> error "unreachable"

whnfTreeQ :: TestTree

whnfTreeQ = expectFail $ testCase "Q-whnf" $ do

case partialTreeQ of

Node _ _ _ -> pure () -- Succeed

Leaf -> error "unreachable"

partialTreeQ :: Tree ()

partialTreeQ = runIdentity (unfoldM_BF_Q (Identity . partialTreeF) True)

bfGraph_L :: Int -> Tree Int

bfGraph_L = (`evalState` Set.empty) . unfoldM_BF_L visitGraph

testGraphL :: TestTree

testGraphL = testCase "L-graph" $

bfGraph_L 1 @?=

Node 1

(Node 2 Leaf

(Node 5 Leaf Leaf))

(Node 4 Leaf (Node 6 Leaf Leaf))

bfGraph_E :: Int -> Tree Int

bfGraph_E = (`evalState` Set.empty) . unfoldM_BF_E visitGraph

testGraphE :: TestTree

testGraphE = testCase "E-graph" $

bfGraph_E 1 @?=

Node 1

(Node 2 Leaf

(Node 5 Leaf Leaf))

(Node 4 Leaf (Node 6 Leaf Leaf))Note that

tasty-benchalso reports memory statistics (allocated, copied, and peak memory) when certain RTS options are enabled, which I’ve done by compiling the test executable with-with-rtsopts=-T.↩︎- The tree-traversals library by Noah Easterly.

- Algebras for weighted search, by Donnacha Oisín Kidney and Nicolas Wu, in ICFP 2021.

- Breadth-first traversal via staging, by Jeremy Gibbons, Donnacha Oisín Kidney, Tom Shrijvers, and Nicolas Wu, in MPC 2022. It has been revised into a short version (5 pages): Phases in software architecture in FUNARCH 2023.

Speaking of variants of free monads, one might think of the “freer” monad, which has different motivations and which does not help us here.↩︎